Sentiment or tonality analysis is the most requested feature offered by media monitoring and social listening tools. In the Excellence Forum, our confidential benchmarking circle for digital communicators, it has been hotly debated for years. Everyone wants it, from the C-level to the experts, and at the same time many know how problematic the measurement method is. Can "sentiment" work as a metric at all? Can "positive tonality" be a KPI that rates the quality of one's own communication and brand resonance? Our answer: no. This long read explains why.

"Sentiment" is discussed even at the top level. Why?

Most digital KPIs share a problem: few decision-makers ever see them, and few experts have the knowledge to interpret them correctly. "Sentiment" is different. Everyone talks about "sentiment", because EVERYONE immediately understands what it means: "sentiment analysis" is an everyday experience. Each of us rates all kinds of things every day in the categories "positive / neutral / negative". So why shouldn't a tool be able to do the same with "content"?

Some heads of communications apparently think so too and declare "positive sentiment" a strategic goal for corporate communications. Corresponding metrics (net sentiment) are anchored in management reports, and positive mentions of one's own brand become the internal currency of success. Negative mentions, by contrast, get twisted and turned in reporting, because according to sentiment analysis they indicate less successful communications work.
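Tools define "net sentiment" differently, and the text does not say which formula such management reports use. A minimal sketch, assuming two plausible variants (neutral mentions counted in the denominator, or left out); the function name, parameter and labels are hypothetical:

```python
from collections import Counter


def net_sentiment(labels, include_neutral=True):
    """Net sentiment in percent from per-mention labels.

    Assumed definition: (positive - negative) / denominator * 100, where the
    denominator is all mentions (include_neutral=True) or only the positive
    and negative ones (include_neutral=False).
    """
    counts = Counter(labels)
    positive, negative = counts["positive"], counts["negative"]
    denominator = sum(counts.values()) if include_neutral else positive + negative
    if denominator == 0:
        raise ValueError("no mentions to evaluate")
    return 100.0 * (positive - negative) / denominator


# Hypothetical toy data: 3 positive, 5 neutral, 2 negative mentions.
labels = ["positive"] * 3 + ["neutral"] * 5 + ["negative"] * 2
print(net_sentiment(labels))                         # 10.0
print(net_sentiment(labels, include_neutral=False))  # 20.0
```

The same mentions yield 10 or 20 points depending on a purely definitional choice.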

Objections to a "positive sentiment" KPI are hardly to be expected inside companies. The metric is too popular, too eye-catching. Every communicator who still carries their theoretical toolkit knows that an objection would be necessary. To avoid pointless discussions, they ignore the results of "sentiment analysis" instead. Silently. And rightly so.

"Sentiment analysis" already fails in theory

The "fundamental laws" of communication theory state that the MEANING of a piece of content (including its "tonality") depends entirely on the perspective of the observer. Only he / she / it generates (from within) the context that gives a piece of information a particular meaning, or none at all. (Relationship aspect, 2nd axiom of communication theory, Watzlawick et al.)

The communication axioms posit that the content and the meaning of a piece of "content" are by nature two fundamentally different things that maintain a rather indirect and autonomous relationship with each other. An on-off relationship, so to speak. She (meaning) lives in Munich. He (content) lives in Hamburg. They only meet in the minds of recipients, and always in different ones. And yet they are in a steady relationship.

One has to be clear about this: the information, the content, has NOTHING to do with meaning and its evaluation, for example as tonality. This is almost impossible to grasp from everyday experience, but in reality it is so. That is why Shannon & Weaver wrote: "In particular, information must not be confused with meaning." (The Mathematical Theory of Communication, 1949)

If meaning and sentiment depend on the subjective relationship aspect, what relationship does a monitoring and listening tool have with the sender of a piece of content? None. And what context can the tool provide? Only an objectified one, that is, a meaningless one.

How the measurement works

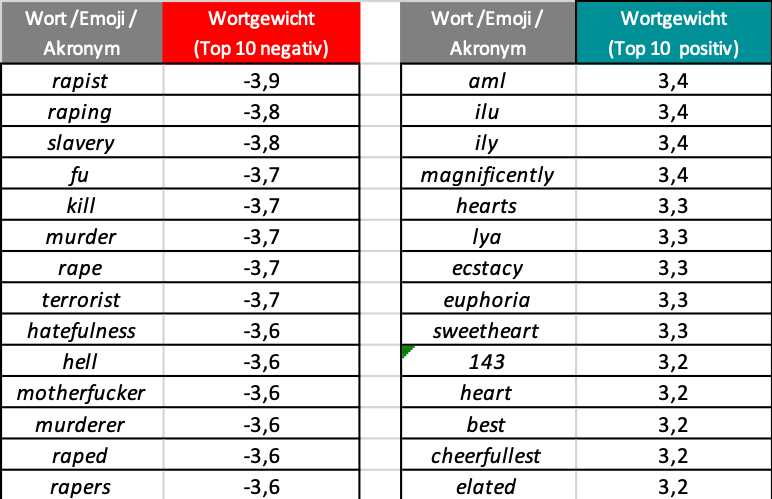

This meaninglessness can be illustrated nicely with a table of the tonality leaders in VADER (thanks, Dr. M!). VADER is a popular open-source algorithm for sentiment analysis that has been maintained at Georgia Tech (Hutto/Gilbert) since 2015.

In VADER, every word is assigned a fixed tonality value. In the rule-based "old school" approach this happens in advance. If VADER were a machine learning algorithm, this step would happen only after analyzing training data, but the principle, a fixed "sentiment" assigned to each word, would remain the same. The machines recognize words and add up the negative and positive word weights wired to them. At the end a balance is computed and, voilà, a 300-word post has more positive than negative word weight.
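The summing principle described above fits in a few lines. This is a minimal sketch under stated assumptions: the toy lexicon and its weights are hypothetical (not VADER's actual values), and VADER's own heuristics for negation, intensifiers, capitalization and punctuation, as well as its normalization into a "compound" score, are deliberately left out.

```python
import string

# Hypothetical toy lexicon: word -> fixed tonality weight (not VADER's values).
LEXICON = {
    "love": 3.25,
    "great": 3.0,
    "problem": -1.5,
    "fraud": -2.75,
}


def lexicon_balance(text, lexicon=LEXICON):
    """Look up each word's fixed weight and return (positive, negative, balance).

    Words missing from the lexicon count as 0. Order and context are ignored.
    """
    words = (w.strip(string.punctuation).lower() for w in text.split())
    weights = [lexicon.get(w, 0.0) for w in words]
    positive = sum(w for w in weights if w > 0)
    negative = sum(w for w in weights if w < 0)
    return positive, negative, positive + negative


print(lexicon_balance("We love this great service, despite one problem."))
# (6.25, -1.5, 4.75)
```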

Here are the 15 most negative and the 15 most positive of the 8,000 English VADER entries ("aml" = "all my love").
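A ranking like this can be derived directly from the lexicon. A minimal sketch, assuming the distributed lexicon file has one entry per line with the token and its mean rating as the first two tab-separated columns (further columns are ignored); the function names are hypothetical:

```python
def parse_lexicon(lines):
    """Parse 'token<TAB>mean_rating<TAB>...' lines into {token: mean_rating}."""
    lexicon = {}
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 2:
            continue  # skip empty or malformed lines
        lexicon[fields[0]] = float(fields[1])
    return lexicon


def extremes(lexicon, n=15):
    """Return the n most negative and the n most positive (token, weight) pairs."""
    ranked = sorted(lexicon.items(), key=lambda item: item[1])
    most_negative = ranked[:n]
    most_positive = ranked[::-1][:n]
    return most_negative, most_positive
```

With a local copy of the lexicon file (path assumed), `extremes(parse_lexicon(open(path, encoding="utf-8")))` would return both lists.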

And now a case study

Following the logic of the list, words such as "rapist", "sentence" and "imprisonment" are unquestionably associated with a rather negative tonality. So if VADER finds these three words in a text, it records a "negative word weight" for each of them.

Applying this procedure to the following fictitious five-word headline, sentiment analysis will rate it as "strongly negative", and by its own logic with good reason: "Rapist sentenced to life imprisonment". Is that really negative? Or is it not rather positive?
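The effect can be reproduced with a toy scorer. A minimal sketch with hypothetical weights (not VADER's values): the conviction headline gets a clearly negative score, while a hypothetical headline about an acquittal scores less negative, although many readers would see the conviction as the better news. Reordering the words changes nothing, because the scorer has no context.

```python
# Hypothetical weights, chosen only to illustrate the mechanism.
LEXICON = {"rapist": -3.0, "sentenced": -1.5, "imprisonment": -2.0}


def score(headline, lexicon=LEXICON):
    """Bag-of-words score: sum of fixed word weights, blind to order and context."""
    return sum(lexicon.get(word.strip(".,!?").lower(), 0.0) for word in headline.split())


conviction = score("Rapist sentenced to life imprisonment")  # -6.5
acquittal = score("Rapist acquitted")                        # -3.0
shuffled = score("imprisonment life to sentenced Rapist")    # -6.5, same as conviction
print(conviction, acquittal, shuffled)
```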

Only tools answer this question unambiguously. The practical test shows where that leads.

"Sentiment" in a practical test: we look for an extreme example, the worst case for "sentiment"

Whether a KPI can work well or whether it leads astray is proven in extreme situations. Maximally positive, maximally negative: THIS is where a KPI has to show whether it measures correctly. So let us assume an extreme scenario of negative coverage and test the measurement of sentiment. To do so, we need a worst-case communications scenario. We are looking for:

- a company that is certain to have no positive news, ONLY NEGATIVE NEWS.

To qualify, it would have to be (at least) in an acute crisis that overshadows everything, really everything, in its media coverage. A fraud scandal, for example, or an insolvency.

- a company that generates MASSIVE AMOUNTS OF NEGATIVE NEWS.

The crisis must therefore have political significance, for example. But high reach also needs tabloid ingredients. So let's add celebrities, crime, board members, organized crime, manhunts, escape, Interpol, South Sea islands… We are looking for a global scandal, complete with the matching tabloid media (portals, clickbaiters, Bild, Facebook, etc.), also worldwide.

- a company with LONG-LASTING NEGATIVE NEWS.

A permanent crisis, then, ideally lasting months. Could it get any worse? Hardly.

THAT would be an ideally negative run for determining the maximum negative value that a "KPI" (key performance indicator) called sentiment can reach at all. And yes, this worst-case scenario actually exists. It is called Wirecard.

Worst case in practice: the "sentiment" of Wirecard

"Pile of rubble" Wirecard. With an insolvency filing, straight from the DAX 30 into custody. Wirecard stands for the largest, most comprehensive, longest and most public organized fraud in European business history, and for coverage to match. An ideal case for putting "sentiment measurement" to the worst-case test.

So we look at the data of one of the market-leading monitoring tools. Naturally, it now also features "artificial intelligence" in its marketing: sentiment can supposedly be analyzed even better now and embedded in even slicker charts.

We rule out the last remnants of any merely hypothetical positivity for Wirecard by analyzing only the mentions of its CEO, Dr. Markus Braun. Now, at the latest, we expect the absolute MAXIMUM of negativity: the sentiment Armageddon, impossible to undercut, THE negative benchmark.

Will it be 100% negative sentiment? Or a value in that direction? 90%, maybe 80%? Is 70% fair? But wait, "irony cannot be detected", so let's go even lower. Let's say 60%, no, 50% for the tonality of the media coverage of the Wirecard CEO.

Is a negative benchmark of minus 50% in such a clear-cut case really asking too much?

Here is the result for Wirecard at the peak of its crisis.

- At the height of the crisis, May to July 2020, Wirecard with its CEO reached a negative peak of 29% negative mentions.

- At the same time, 70% of mentions were "neutral". That was in the week around 20 July.

- On average over May to July 2020, the height of the crisis, 14% of mentions were negative.

14% negative tonality in a (rightly) globally scandalized, long-lasting and dramatic corporate crisis?
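How figures of this kind come about can be sketched from per-mention labels. A minimal sketch with hypothetical toy data (not the Wirecard data) that computes weekly shares and a period share. The text does not say whether the tool's 14% average is pooled over all mentions or averaged over weekly shares; with unequal weekly volumes the two differ, as the toy data shows. All names are hypothetical.

```python
from collections import Counter, defaultdict

LABELS = ("positive", "neutral", "negative")


def weekly_shares(mentions):
    """mentions: iterable of (week, label). Returns {week: {label: percent}}."""
    by_week = defaultdict(Counter)
    for week, label in mentions:
        by_week[week][label] += 1
    shares = {}
    for week, counts in sorted(by_week.items()):
        total = sum(counts.values())
        shares[week] = {label: 100.0 * counts[label] / total for label in LABELS}
    return shares


def pooled_share(mentions, label="negative"):
    """Share of `label` over the whole period; every mention weighs the same."""
    labels = [lab for _, lab in mentions]
    return 100.0 * labels.count(label) / len(labels)


# Hypothetical toy data: a busy, calm week and a smaller, more negative week.
mentions = ([("W1", "negative")] * 1 + [("W1", "neutral")] * 19
            + [("W2", "negative")] * 3 + [("W2", "neutral")] * 6
            + [("W2", "positive")] * 1)

shares = weekly_shares(mentions)
peak = max(s["negative"] for s in shares.values())                         # 30.0
mean_of_weeks = sum(s["negative"] for s in shares.values()) / len(shares)  # 17.5
pooled = pooled_share(mentions)                                            # about 13.3
```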

What does sentiment measurement actually tell us?

As always: a metric tells us something about what is measured and how it is measured.

In the case of sentiment, what is measured is a frequency of words. The words are linked to word weights that produce tonality ratings even though the subjective (interpretive) contexts that constitute any "sentiment" in the first place are missing. That is why sentiment analysis is irrelevant, and at the same time why it is so hotly debated (by subjective contexts competing for interpretive authority).

Conclusion: a sentiment KPI cannot produce valid statements for management.

And even if its statements were valid: a KPI that reaches a maximum of minus 29% in a worst-case scenario is unsuitable for data-driven steering. It does not vary enough and measures imprecisely.

Sentiment data does not belong in the executive suite, but it can be useful in the engine room

Our sentiment story does have a happy ending, though. Whoever measures wrongly, but consistently so, can still create value from the data produced that way. In time-series analysis, strong deviations from the normal value (even of "wrong KPIs") are always interesting. In other words: strong swings in sentiment data ALWAYS warrant a closer look, a manual review of the content and, above all, of the context.
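The text does not specify how tools detect "strong deviations from the normal value". One simple option, swapped in here as an assumption, is a rolling z-score: each value is compared with the mean and standard deviation of the preceding window. A minimal sketch; the function name, window size, threshold and toy series are hypothetical:

```python
from statistics import mean, stdev


def flag_deviations(series, window=7, threshold=3.0):
    """Return indices whose value deviates from the preceding `window` values
    by more than `threshold` standard deviations (rolling z-score).

    `window` must be at least 2, because stdev needs two data points.
    """
    flagged = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all counts as a deviation.
            deviates = series[i] != mu
        else:
            deviates = abs(series[i] - mu) / sigma > threshold
        if deviates:
            flagged.append(i)
    return flagged


# Hypothetical daily share of negative mentions in percent.
daily_negative_share = [10, 11, 9, 10, 12, 10, 11, 10, 29, 11]
print(flag_deviations(daily_negative_share))  # [8]
```

Because the method only compares the series with itself, it works even if the underlying sentiment scores are systematically wrong, as long as they are wrong in a consistent way.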

Tool vendors (and we, too) use bots: automated functions that identify review cases based on deviations in sentiment data, put them into alert emails and send ("fire") them to people who take a closer look at the content around conspicuous mentions and assess it. And they do so as the axioms of communication require: WITH context, WITH subjectivity.
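The alerting step can be sketched with Python's standard `email` package. A minimal sketch: the function name, addresses and flagged day are hypothetical, the message is only composed and not sent, and how vendors' bots actually format their alerts is not described in the text.

```python
from email.message import EmailMessage


def build_alert(flagged_days, recipient, sender="monitoring-bot@example.com"):
    """Compose (but do not send) an alert listing days with conspicuous
    sentiment data, so that a person can review the content and its context."""
    msg = EmailMessage()
    msg["Subject"] = f"Sentiment alert: {len(flagged_days)} day(s) to review"
    msg["From"] = sender
    msg["To"] = recipient
    lines = [f"- {day}: negative share {share:.0f}%" for day, share in flagged_days]
    msg.set_content(
        "Please review the mentions around these days, with their context:\n"
        + "\n".join(lines)
    )
    return msg


# Hypothetical usage: one flagged day from a deviation check.
alert = build_alert([("2024-03-05", 29.0)], recipient="comms-team@example.com")
print(alert["Subject"])  # Sentiment alert: 1 day(s) to review
```

Handing the message to a mail server, for example with `smtplib.SMTP(host).send_message(alert)`, would be the actual "firing"; the host depends on the deployment.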

So the nonsensical metric "sentiment" finds a home after all: as a truffle hog that, from the engine room, raises questions for communications professionals.

Sentiment analysis makes internal quality assurance easier and faster. That quality assurance, however, remains an important job for humans alone, people who know the company's interests.